Three Concerns in Software Systems

As we start the system design journey, I would first really recommend checking out Designing Data-Intensive Applications, or, as people in the tech community call it, the big red pig book :D Most of the fundamentals posts were actually inspired by this book and its great author, Martin Kleppmann.

First of all, it is important to note that many applications are data-intensive rather than compute-intensive. There are a few types of building blocks we use to create data-intensive applications:

- Storing data so it can be retrieved later (databases)

- Memoizing the results of expensive computations to speed up reads (caches)

- Searching or filtering data by keywords (search indexes)

- Sending messages to be handled asynchronously (stream processing)

- Periodically processing large amounts of accumulated data (batch processing)
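To make the cache building block concrete, here is a minimal sketch using Python's standard-library `functools.lru_cache`, which memoizes a function's results in process memory. The function `expensive_report` and its workload are hypothetical stand-ins for a slow query; a shared cache such as Memcached or Redis lives outside the application process, but the idea of reusing a stored result instead of recomputing it is the same.

```python
from functools import lru_cache

calls = 0  # counts how many times the expensive body actually runs


@lru_cache(maxsize=1024)
def expensive_report(user_id: int) -> str:
    """Hypothetical expensive computation, e.g. an aggregation over many rows."""
    global calls
    calls += 1
    total = sum(i * i for i in range(100_000))  # simulated heavy work
    return f"report for user {user_id}: {total}"


expensive_report(42)  # miss: computed and stored in the cache
expensive_report(42)  # hit: returned from the cache, body not executed
expensive_report(7)   # miss: different argument, different cache entry

print(calls)                          # 2
print(expensive_report.cache_info())  # CacheInfo(hits=1, misses=2, maxsize=1024, currsize=2)
```

`maxsize` bounds memory use by evicting the least recently used entries; deciding when cached entries become stale after the underlying data changes is the hard part, and this sketch ignores it.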

For software systems, three concerns are especially important:

- Reliability: The system should keep working correctly even in the face of hardware, software, or human faults.

- Scalability: As the system grows, we should make sure there are reasonable ways of handling that growth.

- Maintainability: Over time, many different people will work on the system, so it should be easy for them to maintain and adapt it.

Reliability

“Anything that can go wrong will go wrong.” (Murphy’s law)

The straightforward definition of reliability is the ability of a system to keep working when things go wrong. The things that can go wrong are called faults, and systems that can cope with them are called fault-tolerant, resilient, or reliable. It’s important to note the difference between a fault and a failure: a fault is one component of the system deviating from its spec, whereas a failure is when the system as a whole stops providing the required service to the user.

How do we test a system for resiliency? One of the tools I found amazing is Netflix Chaos Monkey, which randomly terminates instances in production to ensure that engineers build their services to be resilient to instance failures.

Faults can be grouped into the following three categories:

- Hardware faults: In most cases we cope with this type of fault through redundancy, in other words by adding extra hardware components so that a single broken part does not cause a system failure. For example, one node can be updated and rebooted while a redundant node takes its place, so the system stays available during the reboot.

- Software errors: These are hard to detect and, unlike hardware faults, which are largely independent of each other, they can easily trigger cascading failures: a small fault in one component triggers a fault in another, and the chain reaction can make the whole system unavailable. There is no silver bullet for this type of fault, but we should think carefully about the assumptions and interactions in the system, test thoroughly, simulate crashes, and measure and monitor the system's behavior.

- Human errors: We should set up monitoring and check the heartbeat of our system by collecting performance metrics (CPU, memory consumption…) and error rates (a process also called telemetry), so that we can quickly isolate where a fault occurred. We should also make recovery from problems quick and easy, while minimizing the opportunities for human error.
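The redundancy and chaos-testing ideas above can be sketched together in a small simulation. It assumes replicas fail independently, which is a reasonable first approximation for hardware faults but not for correlated software errors; under that assumption, the chance that all `n` replicas are down at once is `p ** n`. `ReplicatedService` and `chaos_round` are hypothetical names; the real Chaos Monkey terminates cloud instances rather than Python objects.

```python
import random


def all_down_probability(p: float, replicas: int) -> float:
    """Chance that every replica is down at the same time,
    ASSUMING each one is down independently with probability p."""
    return p ** replicas


class ReplicatedService:
    """Hypothetical service backed by interchangeable replicas."""

    def __init__(self, replicas: int) -> None:
        self.alive: set[int] = set(range(replicas))

    def handle(self, request: str) -> str:
        if not self.alive:
            # Every replica is gone: the faults have become a failure.
            raise RuntimeError("service unavailable")
        replica = min(self.alive)  # route to any healthy replica
        return f"replica {replica} served {request}"


def chaos_round(service: ReplicatedService, rng: random.Random) -> int:
    """Chaos-Monkey-style step: terminate one randomly chosen live replica."""
    victim = rng.choice(sorted(service.alive))
    service.alive.discard(victim)
    return victim


rng = random.Random(0)  # seeded so the run is reproducible
service = ReplicatedService(replicas=3)
for step in range(2):
    killed = chaos_round(service, rng)
    # One replica down is only a fault; the service must still answer.
    print(f"killed replica {killed}:", service.handle(f"req-{step}"))

print(all_down_probability(0.01, 1))  # one machine: 1 in 100
print(all_down_probability(0.01, 3))  # three replicas: about 1 in a million
```

Running more chaos rounds than there are replicas eventually makes `handle` raise `RuntimeError`, which is exactly the fault-turning-into-failure situation that redundancy is meant to make unlikely.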

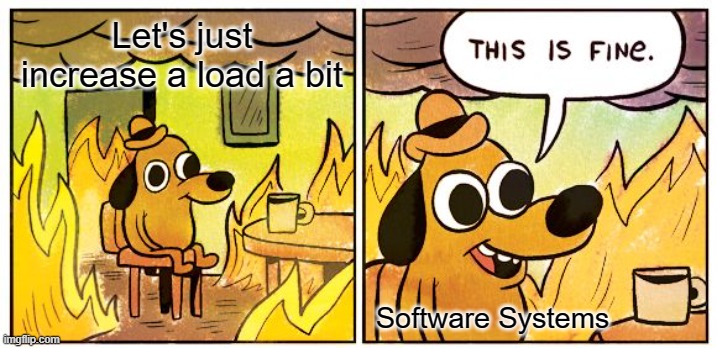

Scalability

We use the term scalability to describe a system's ability to cope with increased load. To describe load, we use load parameters, which can be anything from the cache hit rate or the database read/write ratio to the number of requests per second to a web server.

Let's go over a quick example of choosing a load parameter: Twitter's home timeline generation. When a user posts a tweet, we look up all the users who follow the poster and insert the new tweet into each of their cached timelines. This shifts the boundary between the read and write paths: we do more work at write time so that reads are faster. One of the key load parameters here is the number of followers per user, because celebrity (hot) users can have so many followers that inserting a tweet into all of their timelines would take too long. One approach is a hybrid: detect celebrity users and skip the fan-out for their tweets; then, when generating any user's timeline, merge their cached timeline (containing tweets from the non-celebrity users they follow) with tweets fetched separately from the celebrities they follow.
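Here is a minimal in-memory sketch of that hybrid approach: fan-out on write for ordinary users, merge at read time for celebrities. The threshold `CELEBRITY_THRESHOLD = 3` and all function names are hypothetical and chosen only to keep the example small; this is not Twitter's actual implementation. Tweets are `(timestamp, author, text)` tuples so that sorting in reverse puts the newest first.

```python
from collections import defaultdict

CELEBRITY_THRESHOLD = 3  # hypothetical cut-off; real systems would use a far larger one

Tweet = tuple[int, str, str]  # (timestamp, author, text)

followers: defaultdict[str, set[str]] = defaultdict(set)  # author -> followers
following: defaultdict[str, set[str]] = defaultdict(set)  # user -> authors followed
timelines: defaultdict[str, list[Tweet]] = defaultdict(list)  # cached home timelines
celebrity_tweets: defaultdict[str, list[Tweet]] = defaultdict(list)  # not fanned out


def follow(user: str, author: str) -> None:
    followers[author].add(user)
    following[user].add(author)


def post_tweet(author: str, ts: int, text: str) -> None:
    tweet = (ts, author, text)
    if len(followers[author]) >= CELEBRITY_THRESHOLD:
        # Too many followers to fan out: store the tweet once, merge it at read time.
        celebrity_tweets[author].append(tweet)
    else:
        # Fan-out on write: push the tweet into every follower's cached timeline.
        for user in followers[author]:
            timelines[user].append(tweet)


def home_timeline(user: str, limit: int = 10) -> list[Tweet]:
    # Start from the precomputed cache (tweets from non-celebrities)...
    merged = list(timelines[user])
    # ...and merge in tweets from followed celebrities, fetched separately.
    for author in following[user]:
        merged.extend(celebrity_tweets.get(author, []))
    merged.sort(reverse=True)  # newest first
    return merged[:limit]


for u in ("ana", "ben", "cy"):
    follow(u, "star")  # "star" reaches the celebrity threshold
follow("ana", "dev")   # "dev" stays an ordinary user

post_tweet("dev", 1, "shipping a fix")
post_tweet("star", 2, "hello everyone")
print(home_timeline("ana"))
# [(2, 'star', 'hello everyone'), (1, 'dev', 'shipping a fix')]
```

The read path does slightly more work for users who follow celebrities, in exchange for the write path never having to touch millions of cached timelines for a single tweet.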

We can look at how performance changes under increasing load in two ways:

- If we increase a load parameter and keep the system resources unchanged, how is the system's performance affected?

- If we increase a load parameter, how much do we need to increase the resources to keep the performance unchanged?

It’s important to note the difference between latency and response time. The response time is what the client sees: the time to process the request plus network delays and queueing delays. Latency is the duration that a request is waiting to be handled, during which it is latent.

To describe the performance and availability of a service (e.g. in service level objectives (SLOs) and service level agreements (SLAs)), we often use percentiles: if the 95th percentile response time is 1.5 seconds, then 95 out of 100 requests take less than 1.5 seconds, and 5 out of 100 take 1.5 seconds or more.

High percentiles (tail latencies) matter because of tail latency amplification: when one end-user request requires several backend calls in parallel, it is only as fast as the slowest of them (the critical path), so even a small share of slow backend calls slows down a much larger share of end-user requests. During load testing, the load-generating client should also keep sending requests independently of the response time; if it waits for each response before sending the next request, queues stay artificially short and the measurements look better than reality. To aggregate response time data across machines or time windows, add up histograms rather than averaging percentiles.
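The percentile definition, tail latency amplification, and histogram aggregation can all be checked with a little arithmetic. This sketch uses the nearest-rank method for percentiles (one of several common definitions), assumes backend calls are slow independently of each other when estimating amplification, and merges per-server histograms with fixed-width buckets (the 100 ms width is an arbitrary choice). All sample values are made up for illustration.

```python
import math
from collections import Counter


def percentile(samples: list[float], p: float) -> float:
    """Nearest-rank percentile: the smallest sample such that at least
    p% of all samples are less than or equal to it."""
    if not samples or not 0 < p <= 100:
        raise ValueError("need samples and 0 < p <= 100")
    ordered = sorted(samples)
    rank = math.ceil(p * len(ordered) / 100)  # 1-based rank
    return ordered[rank - 1]


def slow_request_probability(backend_calls: int, p_slow_call: float) -> float:
    """Chance that a user request waiting on `backend_calls` parallel calls is slow,
    ASSUMING each call is slow independently with probability p_slow_call."""
    return 1 - (1 - p_slow_call) ** backend_calls


def to_histogram(samples_ms: list[float], width_ms: int = 100) -> Counter:
    """Count samples per fixed-width bucket, keyed by the bucket's lower edge."""
    return Counter(int(s // width_ms) * width_ms for s in samples_ms)


def histogram_percentile(hist: Counter, p: float) -> int:
    """Approximate percentile: lower edge of the bucket holding the p-th sample."""
    target = math.ceil(p * sum(hist.values()) / 100)
    seen = 0
    for edge in sorted(hist):
        seen += hist[edge]
        if seen >= target:
            return edge
    raise ValueError("empty histogram")


server_a = [95, 98, 102, 105, 110, 115, 120, 125, 130, 1500]  # made-up ms values
server_b = [210, 220, 230, 240, 250, 260, 270, 280, 290, 2900]

print(percentile(server_a, 50), percentile(server_a, 90), percentile(server_a, 99))
# 110 130 1500

# Only 1% of backend calls are slow, but a request that fans out
# to 100 backends is slow about 63% of the time.
print(round(slow_request_probability(100, 0.01), 3))  # 0.634

# Aggregate across servers by adding histograms, not by averaging percentiles.
merged = to_histogram(server_a) + to_histogram(server_b)
print(histogram_percentile(merged, 50), histogram_percentile(merged, 95))
# 200 1500
```

Averaging the two servers' medians (110 ms and 250 ms) would give 180 ms, while the median of all 20 samples lies in the 200 ms bucket, which is why the merged histogram is the safer aggregate.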

You will often hear about the difference between scaling up (vertical scaling: moving to a more powerful machine) and scaling out (horizontal scaling: distributing the load across multiple smaller machines). Distributing load across multiple machines is also known as a shared-nothing architecture. Today it is common to build elastic systems, which automatically detect increases and decreases in load and add or remove machines (nodes) accordingly.
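Elastic scaling usually boils down to a simple control rule evaluated periodically. The sketch below uses the same shape as the rule documented for the Kubernetes HorizontalPodAutoscaler, `desired = ceil(current * current_metric / target_metric)`, clamped to minimum and maximum bounds; the function name, the per-node requests-per-second metric, and the default bounds are assumptions for illustration.

```python
import math


def desired_replicas(current_replicas: int, load_per_node: float,
                     target_load_per_node: float,
                     min_replicas: int = 1, max_replicas: int = 20) -> int:
    """Node count that would bring the average load per node back to the target.

    Mirrors the HorizontalPodAutoscaler-style rule
    ceil(current * current_metric / target_metric), clamped to [min, max].
    """
    desired = math.ceil(current_replicas * (load_per_node / target_load_per_node))
    return max(min_replicas, min(max_replicas, desired))


# 4 nodes each serving 900 req/s, but we want ~600 req/s per node: scale out.
print(desired_replicas(4, 900, 600))   # 6
# Load drops to 200 req/s per node: scale in.
print(desired_replicas(4, 200, 600))   # 2
# A huge spike is capped by max_replicas.
print(desired_replicas(10, 300, 100))  # 20
```

Production autoscalers typically also add a tolerance band and a stabilization (cooldown) period, so that the node count does not oscillate on small load fluctuations.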

Maintainability

Maintainability can be broken down into three concepts:

- Operability: Making Life Easy for Operations. Make it easy for operations teams to keep the system running smoothly.

- Simplicity: Managing Complexity. Make it easy for new engineers to understand the system, by removing as much complexity as possible from the system.

- Evolvability: Making Change Easy. Make it easy for engineers to make changes to the system in the future, adapting it for unanticipated use cases as requirements change.

A project with high complexity is sometimes called a big ball of mud. Reducing complexity greatly improves the maintainability of software, so simplicity should be a key goal when building systems. Good abstractions hide implementation details behind a clean interface, which helps reduce complexity and makes the system easier to evolve.
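As a small illustration of how an abstraction can contain complexity, the sketch below has callers depend only on a narrow key-value interface (a `typing.Protocol`), so the storage behind it can change without touching them. `KeyValueStore`, `InMemoryStore`, `LoggingStore` and `save_bio` are hypothetical names invented for this example.

```python
from typing import Optional, Protocol


class KeyValueStore(Protocol):
    """The only thing callers need to know about storage."""

    def get(self, key: str) -> Optional[str]: ...
    def put(self, key: str, value: str) -> None: ...


class InMemoryStore:
    """Simplest possible implementation, e.g. for tests."""

    def __init__(self) -> None:
        self._data: dict[str, str] = {}

    def get(self, key: str) -> Optional[str]:
        return self._data.get(key)

    def put(self, key: str, value: str) -> None:
        self._data[key] = value


class LoggingStore:
    """Wraps any store to record writes, without changing its callers."""

    def __init__(self, inner: KeyValueStore) -> None:
        self._inner = inner
        self.writes: list[str] = []

    def get(self, key: str) -> Optional[str]:
        return self._inner.get(key)

    def put(self, key: str, value: str) -> None:
        self.writes.append(key)
        self._inner.put(key, value)


def save_bio(store: KeyValueStore, user: str, bio: str) -> None:
    """Application code: unaware of which implementation it is given."""
    store.put(f"bio:{user}", bio)


plain = InMemoryStore()
logged = LoggingStore(InMemoryStore())
for store in (plain, logged):
    save_bio(store, "ana", "hello")  # same caller, different storage
print(plain.get("bio:ana"), logged.get("bio:ana"), logged.writes)
# hello hello ['bio:ana']
```

Swapping in another hypothetical class with the same two methods (for example one backed by a real database) would likewise leave `save_bio` untouched.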

We can define complexity as accidental if it is not inherent in the problem that the software solves (as seen by the users) but arises only from the implementation. As our system evolves, it is also important to distinguish between functional and non-functional requirements:

- Functional requirements: what the system should do (e.g. storing, retrieving, and processing data)

- Non-functional requirements: general properties such as security, reliability, compliance, scalability, compatibility, and maintainability